Okay, so here’s the deal. I’ve been messing around with the NYT Crossword a bunch lately, trying to see what I could dig up, data-wise. It started as a totally random idea, just kinda popped into my head one day while I was staring at the daily puzzle.

First thing I did was figure out how to actually get the data. I wasn’t about to manually copy clues and answers every day – that’s a recipe for instant burnout. So, I hunted around online and found some existing APIs and libraries that could grab the crossword data. There were a few options, but I ended up settling on one that seemed relatively stable and easy to use. Got it all set up with some Python scripts, and boom, data was flowing.

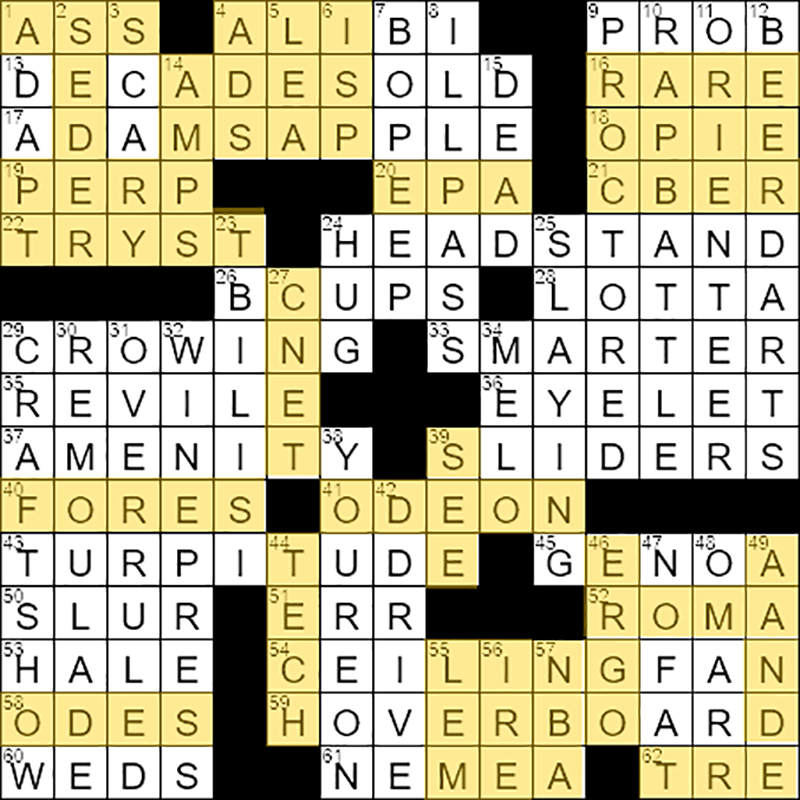

Next up: figuring out what to do with all this crossword info. I started simple. I wanted to see what the most common words were, both in the clues and in the answers. I wrote some more scripts to clean up the text (removing punctuation, converting everything to lowercase, the usual stuff) and then counted the word frequencies. It was kinda cool to see what words popped up the most. “Area,” “Era,” “Ale,” that kinda thing. Nothing earth-shattering, but it was a start.
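A minimal sketch of that cleanup-and-count step might look like the following. The function names and the tiny sample list are made up for illustration; they are not the actual scripts or real puzzle data.

```python
import re
from collections import Counter


def normalize(text):
    """Lowercase the text and drop anything that is not a letter, digit, or whitespace."""
    return re.sub(r"[^a-z0-9\s]", "", text.lower())


def word_frequencies(texts):
    """Count how often each word appears across a list of strings."""
    counts = Counter()
    for text in texts:
        counts.update(normalize(text).split())
    return counts


# Hypothetical sample answers, just to show the shape of the output.
sample_answers = ["AREA", "ERA", "ALE", "AREA", "ERA", "AREA"]
answer_counts = word_frequencies(sample_answers)
print(answer_counts.most_common(3))  # [('area', 3), ('era', 2), ('ale', 1)]
```

The same `word_frequencies` call works on a list of clue strings, since `normalize` strips the punctuation before splitting on whitespace.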

Then I got a little more ambitious. I started looking at clue-answer pairs, wondering if there were any patterns or relationships between the words used in the clues and the words used in the answers. I tried some basic natural language processing techniques, like calculating the cosine similarity between the clue text and the answer text. The results were… mixed. Sometimes it worked, sometimes it didn’t. Turns out, crossword clues are pretty tricky for natural language processing: there’s a lot of wordplay and indirect reference that’s hard for a computer to pick up on.
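For reference, here is a rough sketch of cosine similarity over plain word counts. This assumes a simple bag-of-words representation, which may not match the vectorization actually used in the project, and the example strings are invented.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Turn a string into a word-count vector (assumed representation)."""
    return Counter(text.lower().split())


def cosine_similarity(a, b):
    """Cosine of the angle between two word-count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty text has no direction to compare
    return dot / (norm_a * norm_b)


# Two texts that share words score well above zero...
overlap = cosine_similarity(bag_of_words("ice cream holder"),
                            bag_of_words("ice cream cone"))

# ...but a typical clue and its answer share no words at all.
clue_vs_answer = cosine_similarity(bag_of_words("Historical period"),
                                   bag_of_words("era"))
print(overlap, clue_vs_answer)
```

With word counts, any clue that shares no literal token with its answer scores exactly zero, which is one plausible reason this kind of measure gives mixed results on crosswords.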

After that, I experimented with trying to predict the answer based on the clue. I trained a simple machine learning model (nothing fancy, just a basic neural network) on a bunch of clue-answer pairs. The idea was to give it a clue and see if it could guess the answer. Again, the results were not amazing. The model could sometimes get the easy answers right, but it struggled with anything even remotely challenging. I realized pretty quickly that I was going to need a lot more data and a much more sophisticated model to get any real accuracy.
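One way to put numbers like these in context is a memorization baseline. To be clear, this is not the neural network described above. It is a much simpler stand-in that just returns the most frequent answer it has seen for an identical clue. All names and sample pairs here are hypothetical.

```python
from collections import Counter, defaultdict


def train_lookup(pairs):
    """Build a clue -> answer-count table from (clue, answer) pairs."""
    table = defaultdict(Counter)
    for clue, answer in pairs:
        table[clue.lower().strip()][answer.upper()] += 1
    return table


def predict(table, clue):
    """Return the most common answer seen for this exact clue, or None if unseen."""
    seen = table.get(clue.lower().strip())
    if not seen:
        return None
    return seen.most_common(1)[0][0]


def accuracy(table, pairs):
    """Fraction of (clue, answer) pairs the lookup gets exactly right."""
    if not pairs:
        return 0.0
    hits = sum(predict(table, clue) == answer.upper() for clue, answer in pairs)
    return hits / len(pairs)


# Invented training and held-out pairs.
train_pairs = [("Pub pint", "ALE"), ("Pub pint", "ALE"),
               ("Pub pint", "BEER"), ("Historical period", "ERA")]
test_pairs = [("Pub pint", "ALE"), ("Length times width", "AREA")]

table = train_lookup(train_pairs)
print(predict(table, "pub pint"))   # ALE
print(accuracy(table, test_pairs))  # 0.5
```

A learned model is only earning its keep if it beats this kind of lookup on clues it has never seen verbatim.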

I also tried analyzing the difficulty of the crosswords over time. I figured there must be some way to quantify how hard a puzzle is. I looked at things like the average word length, the number of obscure words, and the number of times a particular word had appeared in the past. I couldn’t come up with a single perfect metric for difficulty, but I did find some interesting trends. For example, it seemed like the Sunday crosswords tended to have longer words and more obscure clues, which makes sense.
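As a sketch of what per-day difficulty features could look like, the snippet below computes average answer length and the share of rare answers for each weekday. The data layout, the `rare_threshold` parameter, and the sample puzzles are assumptions. For simplicity, "rare" is judged against counts over the whole sample rather than only past appearances.

```python
from collections import Counter, defaultdict


def difficulty_features(puzzles, rare_threshold=1):
    """Compute simple per-weekday features from (weekday, answers) tuples.

    An answer counts as rare if it appears at most `rare_threshold`
    times across all the puzzles passed in.
    """
    corpus_counts = Counter(a for _, answers in puzzles for a in answers)

    by_day = defaultdict(list)
    for weekday, answers in puzzles:
        by_day[weekday].extend(answers)

    features = {}
    for weekday, answers in by_day.items():
        if not answers:
            continue  # nothing to average for an empty puzzle
        avg_length = sum(len(a) for a in answers) / len(answers)
        rare = sum(1 for a in answers if corpus_counts[a] <= rare_threshold)
        features[weekday] = {
            "avg_length": avg_length,
            "rare_share": rare / len(answers),
        }
    return features


# Invented sample: one short weekday grid and one longer Sunday grid.
sample_puzzles = [
    ("Mon", ["AREA", "ERA", "ALE"]),
    ("Sun", ["AREA", "QUIXOTIC", "ERA", "ZEITGEIST"]),
]
features = difficulty_features(sample_puzzles)
print(features["Sun"])  # {'avg_length': 6.0, 'rare_share': 0.5}
```

Neither number is a difficulty score on its own, but tracking them side by side by weekday is a cheap way to check trends like the Sunday one.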

Eventually, I kinda ran out of steam. I had explored a bunch of different avenues, but I hadn’t really found any groundbreaking insights. It was still a fun project, though. I learned a lot about data analysis, natural language processing, and the inner workings of crosswords. And who knows, maybe I’ll revisit it someday with some new ideas.

The biggest takeaway? Crosswords are way more complicated than they look. There’s a lot of human ingenuity and creativity that goes into creating those puzzles, and it’s hard to capture that with just data and algorithms. But hey, it was worth a shot!