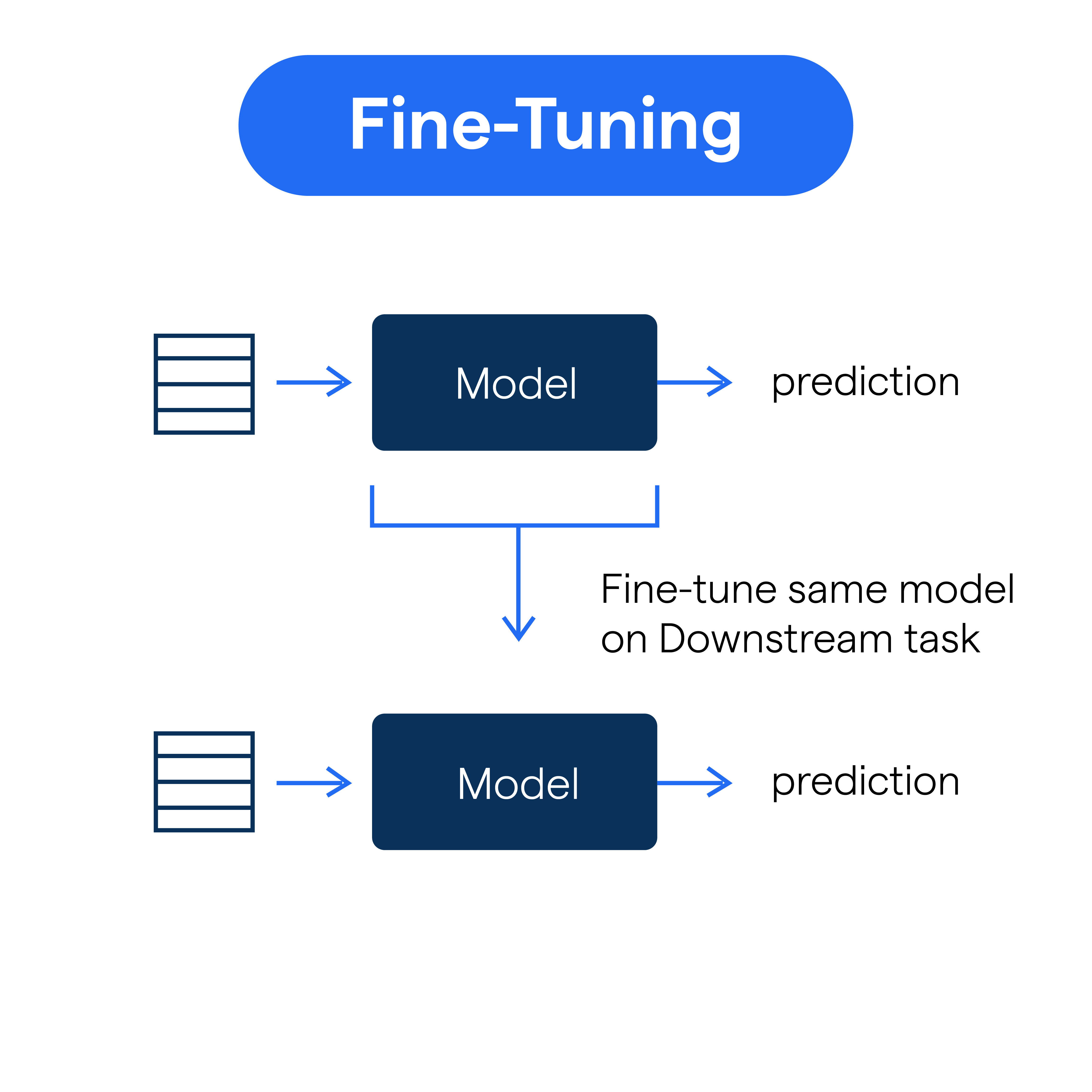

Alright, so I decided to try my hand at fine-tuning that “nyt” thing everyone’s been talking about. I’m not gonna lie, it seemed a bit daunting at first, but I figured, why not give it a shot? I’ve been keeping notes on everything I do, so here’s how it all went down.

Getting Started

First things first, I needed to actually gather everything for the job. I already had the basic setup ready.

Then came the preparation of my dataset. This took a bit of time because I wanted something relevant and not just random. I found what I needed and began shaping it up.

- Made it all into the right format.

- Cleaned up any weird bits and pieces.

- Split the data into training, testing, and validation sets. Basic stuff.
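To make those three steps a bit more concrete, here is a minimal sketch in plain Python. It assumes the dataset is just a list of text strings, and the names `clean_examples` and `split_dataset`, the 80/10/10 ratio, and the fixed seed are all illustrative choices, not the exact setup used here.

```python
import random


def clean_examples(examples):
    """Strip whitespace, then drop empty strings and exact duplicates."""
    seen = set()
    cleaned = []
    for text in examples:
        text = text.strip()
        if text and text not in seen:
            seen.add(text)
            cleaned.append(text)
    return cleaned


def split_dataset(examples, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle once with a fixed seed, then slice into train/val/test."""
    items = list(examples)
    random.Random(seed).shuffle(items)  # seeded so the split is repeatable
    n_train = int(len(items) * train_frac)
    n_val = int(len(items) * val_frac)
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # whatever is left over
    return train, val, test


if __name__ == "__main__":
    raw = ["  hello ", "hello", "", "world", "foo", "bar", "baz"]
    train, val, test = split_dataset(clean_examples(raw))
    print(len(train), len(val), len(test))
```

Shuffling before slicing matters: if the raw data is sorted by topic or date, an unshuffled split can leave the test set looking nothing like the training set.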

The Actual Fine-Tuning

Next came the fine-tuning itself. I started the training process and tweaked some settings, like how many rounds (epochs) it should run for and what learning rate to start with. Basically, I played around a bit until it felt right.

I kept an eye on it as it was running, checking to see if it was actually improving or just, you know, messing up. I changed a parameter here and there and watched it get better (or sometimes worse, whoops!).
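The "set epochs, set learning rate, watch the numbers" loop can be sketched with a toy stand-in. This is not the actual model or training code from this run: it is a tiny one-variable linear model trained by plain gradient descent, and `train`, `mse`, `epochs`, and `learning_rate` are hypothetical names picked for illustration. The shape of the loop is the point: update on the training data, then record the loss on both the training and validation sets every epoch.

```python
def mse(w, b, data):
    """Mean squared error of the line y = w*x + b over (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(train_data, val_data, epochs=50, learning_rate=0.01):
    """Gradient descent on a toy linear model, logging losses per epoch."""
    w, b = 0.0, 0.0
    history = []  # one (train_loss, val_loss) tuple per epoch
    n = len(train_data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in train_data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in train_data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        history.append((mse(w, b, train_data), mse(w, b, val_data)))
    return (w, b), history


if __name__ == "__main__":
    train_data = [(x / 10, 2 * x / 10 + 1) for x in range(10)]
    val_data = [(0.25, 1.5), (0.75, 2.5)]
    _, history = train(train_data, val_data, epochs=200, learning_rate=0.1)
    for epoch in (0, 50, 199):
        train_loss, val_loss = history[epoch]
        print(f"epoch {epoch}: train={train_loss:.4f} val={val_loss:.4f}")
```

If the validation loss stops falling while the training loss keeps dropping, that is usually the cue to stop early or go back and change a setting.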

Checking the Results (Did It Even Work?)

Once the training was done, I had to see if all that effort actually resulted in something useful. I used my test set to put my newly fine-tuned model through its paces.

I won’t bore you with the technical details, but I used some standard checks to see how well it was performing. It took a bit of back and forth, trying different things, and re-running the training until I started seeing some good results. I learned you gotta adjust, train, test, repeat. Gotta put in the work!
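For anyone who does want a peek at the technical side, here is a rough sketch of what "standard checks" could look like. It assumes a classification-style task where the model's predictions are compared against known labels from the test set; that task type is an assumption, and `accuracy` and `precision_recall_f1` are illustrative helpers, not necessarily the checks used in this run.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall, and F1 for one class treated as 'positive'."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    y_true = [1, 0, 1, 1, 0]
    y_pred = [1, 0, 0, 1, 1]
    print("accuracy:", accuracy(y_true, y_pred))
    print("precision, recall, f1:", precision_recall_f1(y_true, y_pred))
```

Running the same checks after every retrain is what makes the adjust, train, test, repeat cycle comparable from one attempt to the next.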

And… that’s pretty much it! It wasn’t as scary as I initially thought. I’m still experimenting and trying to get even better results. I might try some different settings next time. It’s all a learning process, really. Keep practicing, right?